Nomad by HashiCorp is a simple workload orchestrator we use at openFORCE to manage our container-based applications in our cloud environment. To me it is an excellent alternative to Kubernetes, and by far easier to maintain. Currently we run about 25 applications in our cluster, and new applications and services are added regularly.

How does Nomad operate?

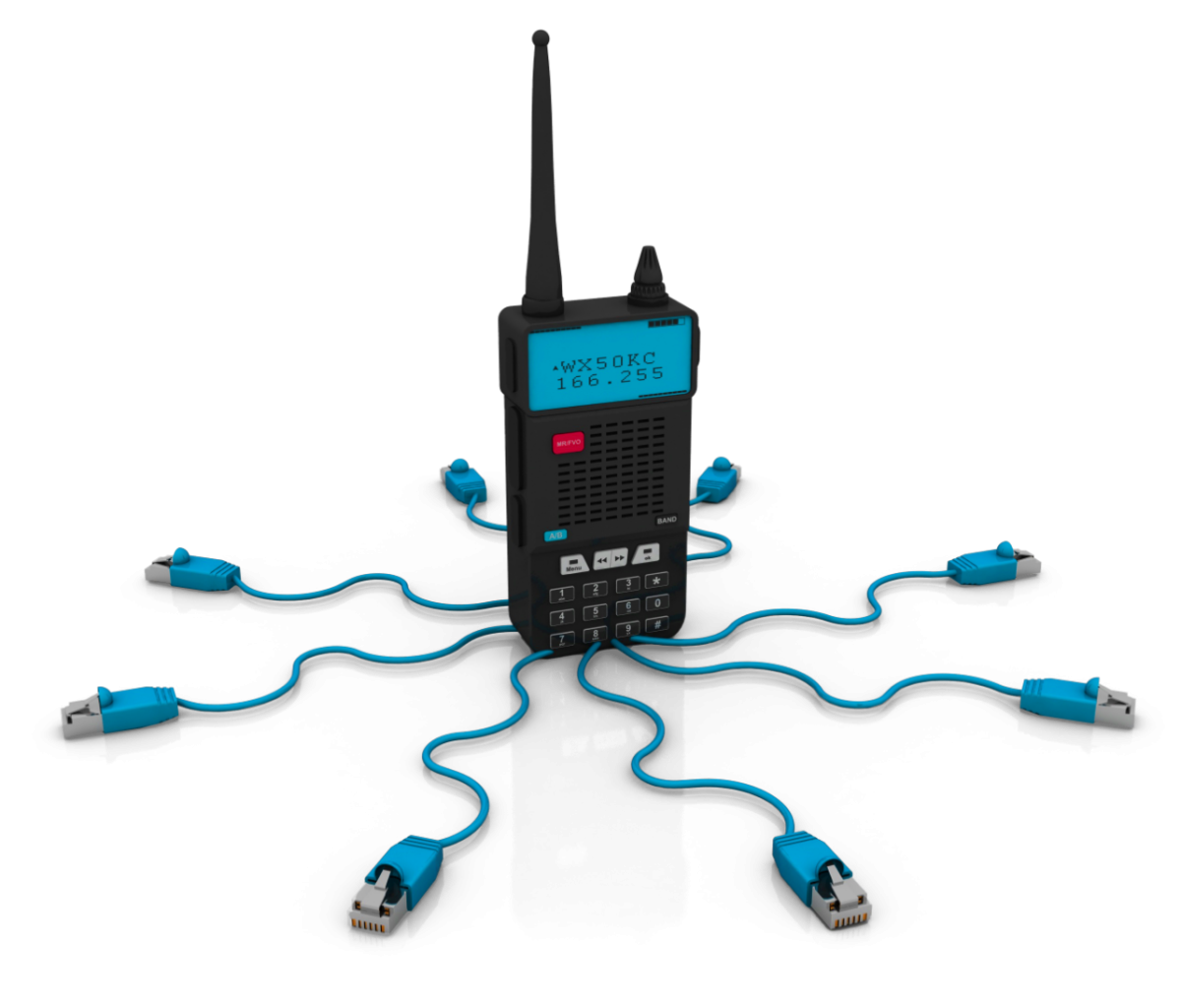

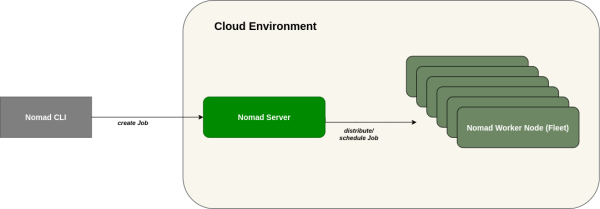

To give you a brief overview of how Nomad works, I prepared the simple image above. HashiCorp also provides detailed use cases on the Nomad website.

Nomad jobs are distributed across the running Nomad client agents (we call them fleet nodes at openFORCE) based on the resources available on each of them. This is the basic idea behind Nomad job scheduling. A job is submitted with the Nomad command line tool, which talks to the Nomad server. The server knows the state of the complete cluster, including all current allocations and the free CPU and memory on every node, and decides where in the fleet the job will be placed.

This is a workable solution for services which do not need persistent storage. These transient services can be moved from one fleet node to another without worrying about the actual physical location of the service.
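As an illustration of such a transient service, here is a minimal job specification sketch. The job, group, and task names, the datacenter `dc1`, and the image are placeholders chosen for this example, not taken from our cluster.

```hcl
# Hypothetical stateless service; all names and values are placeholders.
job "hello-web" {
  datacenters = ["dc1"]
  type        = "service"

  group "web" {
    # Two instances; the scheduler is free to put them on any suitable node.
    count = 2

    task "server" {
      driver = "docker"

      config {
        image = "nginx:1.25"
      }

      # Only nodes with at least this much free capacity are considered.
      resources {
        cpu    = 200 # MHz
        memory = 128 # MB
      }
    }
  }
}
```

Assuming the file is saved as `hello-web.nomad`, it would be submitted with `nomad job run hello-web.nomad`. Because the job requests nothing beyond CPU and memory, Nomad can reschedule it onto a different fleet node at any time.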

What about services that need to write persistent data to some storage location? Think of databases or file services, for example.

The concept of "host volumes"

Nomad introduced the concept of "host volumes" in version 0.10. It lets us specify a local directory on a node that is mounted into our container (think of a Docker volume). As the name suggests, a host volume is tied to one specific host. Host volumes have to be declared in the Nomad client configuration on each fleet node that provides them. A job can then request a host volume, and Nomad will only place that job on the host(s) where this volume is available. If you need further guidance, I strongly advise you to have a look at the available Nomad tutorials.
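To make the two halves concrete, here is a sketch of both sides. The volume name `pg-data`, the host path, the job layout, and the password value are assumptions made for this example. First, the client configuration on the node that owns the directory:

```hcl
# Nomad client configuration on the fleet node that holds the data.
# Volume name and path are placeholders.
client {
  enabled = true

  host_volume "pg-data" {
    path      = "/opt/nomad-volumes/pg-data"
    read_only = false
  }
}
```

Then a job that requests the volume and mounts it into the container:

```hcl
# Hypothetical database job; names, image and credentials are placeholders.
job "postgres" {
  datacenters = ["dc1"]

  group "db" {
    # Request the host volume declared in the client configuration.
    # Only nodes that offer "pg-data" are eligible for this group.
    volume "data" {
      type      = "host"
      source    = "pg-data"
      read_only = false
    }

    task "postgres" {
      driver = "docker"

      config {
        image = "postgres:13"
      }

      env {
        POSTGRES_PASSWORD = "change-me"
      }

      # Mount the requested volume at the path the image writes its data to.
      volume_mount {
        volume      = "data"
        destination = "/var/lib/postgresql/data"
        read_only   = false
      }
    }
  }
}
```

The `source` in the job has to match the `host_volume` name on the client exactly; if no node offers a volume with that name, the job cannot be placed.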

Tagging with Nomad

So far so good. Normally you want some nodes to be reserved exclusively (because they are optimized) for, let's say, database services. You don't want arbitrary jobs in the Nomad cluster to be deployed on these nodes. This is where tags come to the rescue. We can use a tag as a constraint in the Nomad job description. If we want to keep transient services off the database nodes, we add a tag "transient" to the Nomad client configuration of every node where transient services are welcome, and every transient job requires that tag. Database nodes (or other special nodes) do not have the tag "transient" assigned to them, so transient services will never be deployed on them.

This is quite similar to the tag meta information used by GitLab runners, for example.

The rest of the Nomad node configuration for these special services can be identical to the other Nomad fleet nodes.
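Nomad has no setting that is literally called a tag. One way to express the idea, and the assumption behind the following sketch, is a `meta` entry in the client configuration combined with a `constraint` in the job. The key name `transient` follows the text above; everything else is a placeholder.

```hcl
# Client configuration on every fleet node that accepts transient services.
# Database nodes simply omit this meta entry.
client {
  enabled = true

  meta {
    transient = "true"
  }
}
```

```hcl
# Hypothetical transient job that may only run on nodes tagged as transient.
job "hello-web" {
  datacenters = ["dc1"]

  # Nodes without the meta key do not satisfy this constraint.
  constraint {
    attribute = "${meta.transient}"
    value     = "true"
  }

  group "web" {
    task "server" {
      driver = "docker"

      config {
        image = "nginx:1.25"
      }
    }
  }
}
```

Note that in this sketch the protection comes from the job side: a job that leaves out the constraint could still be scheduled on a database node, so every transient job needs to carry it.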

Alternative approaches

An alternative approach would be to use separate Nomad clusters: one for the "special" service nodes and one for all the services that don't need any special preparation. This approach is used at Trivago, who also use Nomad as their deployment target. The drawback is that you need additional servers (at least 3 more nodes for a second Nomad cluster), which is quite an overhead for smaller Nomad installations. So we at openFORCE use tag-based scheduling and are very happy with this approach.

If you have questions regarding our Nomad-based software solutions or would like to share your thoughts, feel free to contact us directly or on social media!

Title image © HashiCorp